Before we get into the topic, let’s start with one basic question!

We’re using Terraform to create and update AWS resources. Every time we run terraform plan or terraform apply, Terraform is able to find the resources it created previously and update them accordingly. But how does Terraform know which resources it is supposed to manage?

First, let’s understand what Terraform state is.

Every time you run Terraform, it records information about the infrastructure it created in a Terraform state file. This file is in JSON format and maps the Terraform resources in your configuration to the real-world resources they represent.

Let’s check it with an example.

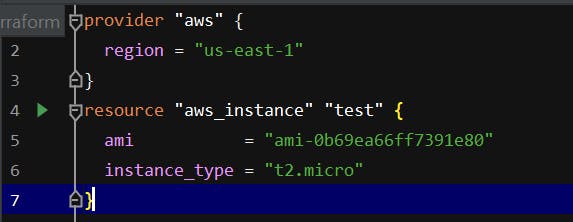

Here I have created a simple Terraform file that creates an EC2 instance.
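In case the file itself is not visible, here is a minimal sketch of what such a main.tf might look like. The resource type aws_instance and the name "test" come from this post; the region, AMI ID, and instance type are placeholders I am assuming for illustration, so swap in values that are valid for your account.

```hcl
# main.tf: a minimal sketch, not necessarily the exact file used in this post.

provider "aws" {
  region = "us-east-1" # assumed region
}

resource "aws_instance" "test" {
  # Placeholder AMI ID: replace with a real AMI available in your region.
  ami           = "ami-0123456789abcdef0"
  instance_type = "t2.micro" # assumed instance type
}
```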

Terraform file for EC2 Instance

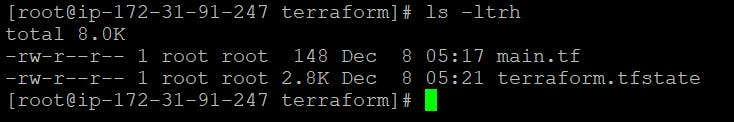

Right now, we have only one main.tf in our directory.

Let’s run terraform init and terraform apply, then check our directory. After running terraform apply, we can see one more file in our directory: terraform.tfstate.

When we open that file, we see a long JSON document. Now let’s go back to the question I asked earlier: how does Terraform know which resources it needs to create or update?

terraform.tfstate

So, using this JSON, Terraform knows that a resource with type aws_instance and name “test” corresponds to a specific EC2 instance in your AWS account. Every time you run Terraform, it can fetch the latest status of this EC2 instance from AWS and compare that to what’s in your Terraform configuration to determine what changes need to be applied.

That covers Terraform state, but one question comes to mind. If you are the only one using Terraform, storing state locally is fine. But what if multiple people work on the project as a team? Then you’ll run into problems. What kind of problems? Let’s look!

1- Every team member needs access to the same Terraform state files. That means you need to store those files in a shared location.

2- If two people run Terraform at the same time, you can run into conflicts and data loss.

So, the most common technique for allowing multiple team members to access a common set of files is to put them in a version control system, e.g. Git. But using Git for state files has disadvantages: most version control systems do not provide any form of locking that would prevent two team members from running terraform apply on the same state file at the same time.

Instead of using version control, the best way to manage shared storage for state files is to use Terraform’s built-in support for remote backends. A number of remote backends are supported, including Amazon S3, Azure Storage, Google Cloud Storage etc.

Remote backends help with several of these issues: manual error (Terraform loads and stores the state automatically on every plan or apply), locking, and secrets (most backends support encrypting the state file).

We’re using Terraform with AWS, so we’ll use Amazon S3 (Simple Storage Service) as the remote backend. S3 is a managed service designed for 99.999999999% durability and 99.99% availability, and it supports encryption, versioning, and locking via DynamoDB.

So, let’s dig into remote state storage with Amazon S3. We’ll go through it step by step. I’m using PyCharm to write the Terraform code.

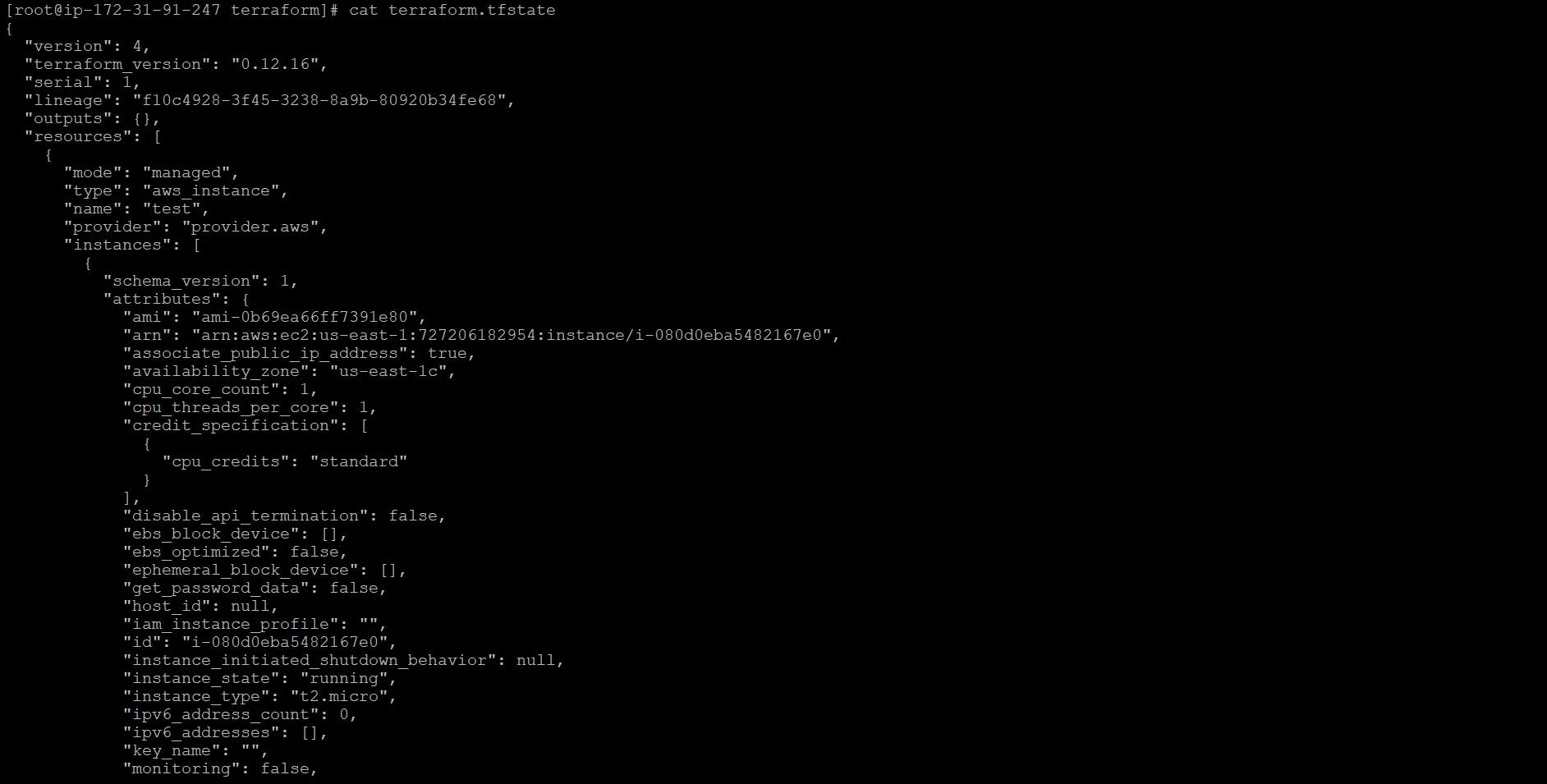

Task-1 Create an S3 bucket
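Here is a rough sketch of a main.tf that matches the blocks explained in this task. The bucket name, region, and resource name terraform_state are hypothetical. I am also assuming the older inline-block style of the AWS provider (3.x and earlier); from provider 4.x onward the inline versioning and server_side_encryption_configuration blocks are deprecated in favor of the separate aws_s3_bucket_versioning and aws_s3_bucket_server_side_encryption_configuration resources.

```hcl
# main.tf: a sketch of the state bucket, assuming AWS provider 3.x syntax.

provider "aws" {
  region = "us-east-1" # assumed region
}

resource "aws_s3_bucket" "terraform_state" {
  # Hypothetical name: S3 bucket names must be globally unique.
  bucket = "my-terraform-state-example"

  # Reject any plan that would destroy this bucket.
  lifecycle {
    prevent_destroy = true
  }

  # Keep every revision of the state file.
  versioning {
    enabled = true
  }

  # Encrypt objects at rest by default.
  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }
}
```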

main.tf: Create S3 Bucket

This is the Terraform code to create the S3 bucket. Let’s go through each block of the code.

Bucket: The name of the S3 bucket. It must be globally unique.

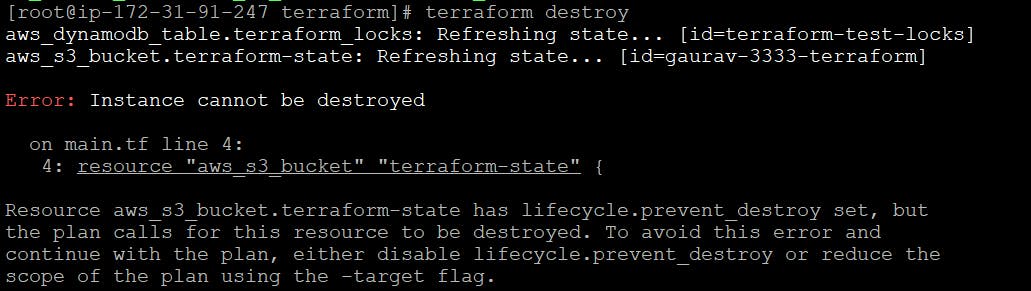

Prevent Destroy: Setting prevent_destroy to true in the lifecycle block protects the resource from accidental deletion. Any plan that would destroy it, for example when someone runs terraform destroy, fails with an error.

terraform destroy

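Versioning: Enabling versioning on the S3 bucket means every update to a file in the bucket creates a new version of that file, so older versions of the state remain available.

Server_side_encryption_configuration: Turns on server-side encryption by default, so state files, and any secrets they contain, are always encrypted on disk when stored in S3.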

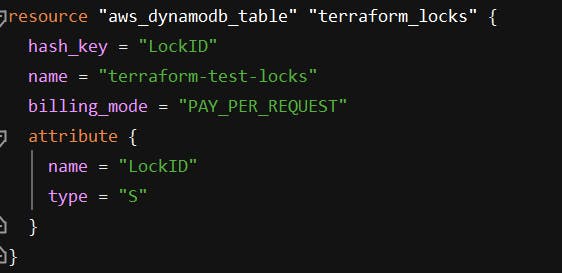

Task-2 Create a DynamoDB table to use for locking

We need to create a DynamoDB table that Terraform will use for locking, so that two developers can’t update the same state at the same time.
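A minimal sketch of such a table, added to the same main.tf, could look like this. The table name and the resource name terraform_locks are hypothetical, and I am assuming on-demand billing. The one part that is not a free choice is the hash key: the S3 backend expects a string attribute named exactly LockID.

```hcl
# A sketch of the lock table; names are placeholders.

resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks-example" # hypothetical table name
  billing_mode = "PAY_PER_REQUEST"         # assumed: pay per request, no capacity planning
  hash_key     = "LockID"                  # must be exactly "LockID" for Terraform locking

  # Definition of the hash key attribute: a string ("S").
  attribute {
    name = "LockID"
    type = "S"
  }
}
```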

DynamoDB Table Creation

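hash_key: The attribute to use as the hash (partition) key. For Terraform state locking, this attribute must be named LockID.

name: The name of the table. It must be unique within a region for your AWS account.

billing_mode: Controls how you are charged for read and write throughput. It’s optional; valid values are PROVISIONED (the default) and PAY_PER_REQUEST.

attribute: Nested attribute definitions; one is required for the hash key. Each definition has a name and a type: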

name: The name of the attribute.

type: Attribute type, which must be a scalar type: S, N, or B for String, Number or Binary data.

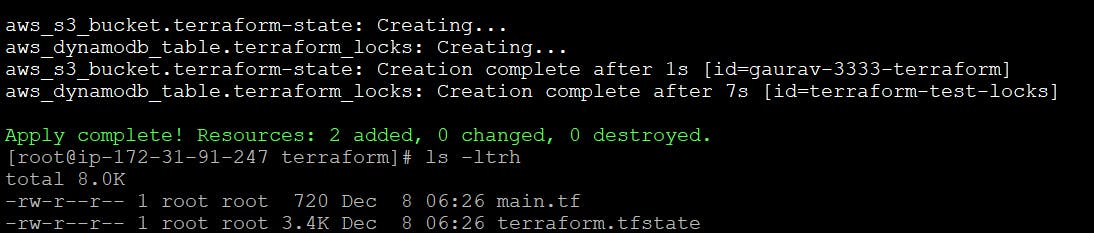

Let’s run the code with terraform init and terraform apply.

terraform init & apply: Created an S3 Bucket & DynamoDB Table

Now we’re done with the code. We ran terraform init (to download the provider code) and terraform apply (to deploy it). But wait a second: after this deployment, the Terraform state is still stored locally. We want to store it in a remote backend, i.e. Amazon S3.

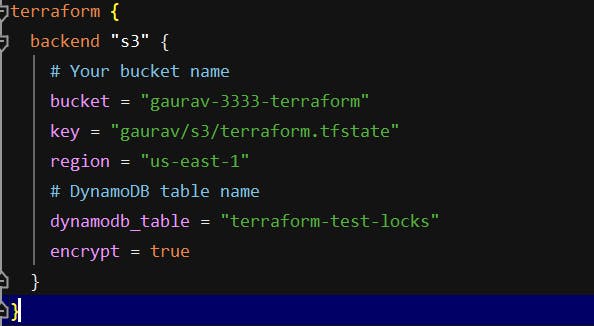

Task-3 Backend Configuration

To configure Terraform to store the state in the S3 bucket, we need to add a backend configuration to our Terraform code.

Note: This is configuration for Terraform itself, so it resides within a terraform block.
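As an illustration, a backend block along these lines would do the job. The bucket name, key path, region, and table name are placeholders I am assuming; they need to match the S3 bucket and DynamoDB table you actually created. Keep in mind that a backend block cannot use variables or references to other resources, so the values have to be written out literally.

```hcl
# A sketch of the S3 backend configuration; all values are placeholders.

terraform {
  backend "s3" {
    bucket         = "my-terraform-state-example"  # the state bucket created earlier
    key            = "global/s3/terraform.tfstate" # path of the state file inside the bucket
    region         = "us-east-1"                   # region of the bucket
    dynamodb_table = "terraform-locks-example"     # table used for state locking
    encrypt        = true                          # encrypt the state file at rest
  }
}
```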

Backend Configuration

We already know all the attributes in the backend configuration above except key.

key: The path inside the S3 bucket where the Terraform state file is written.

After adding the backend configuration, we’ll run the code again.

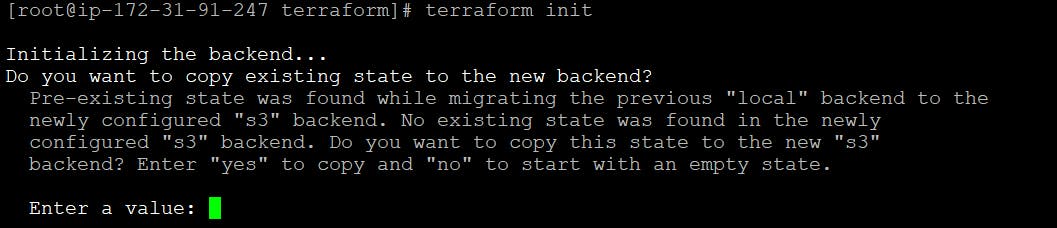

terraform init

Terraform will automatically detect that you already have a state file locally and prompt you to copy it to the new S3 backend. Type yes.

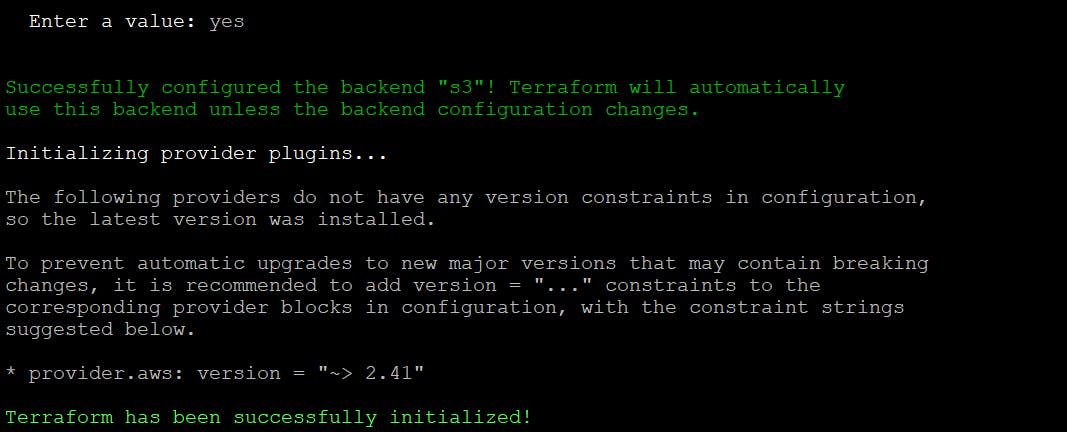

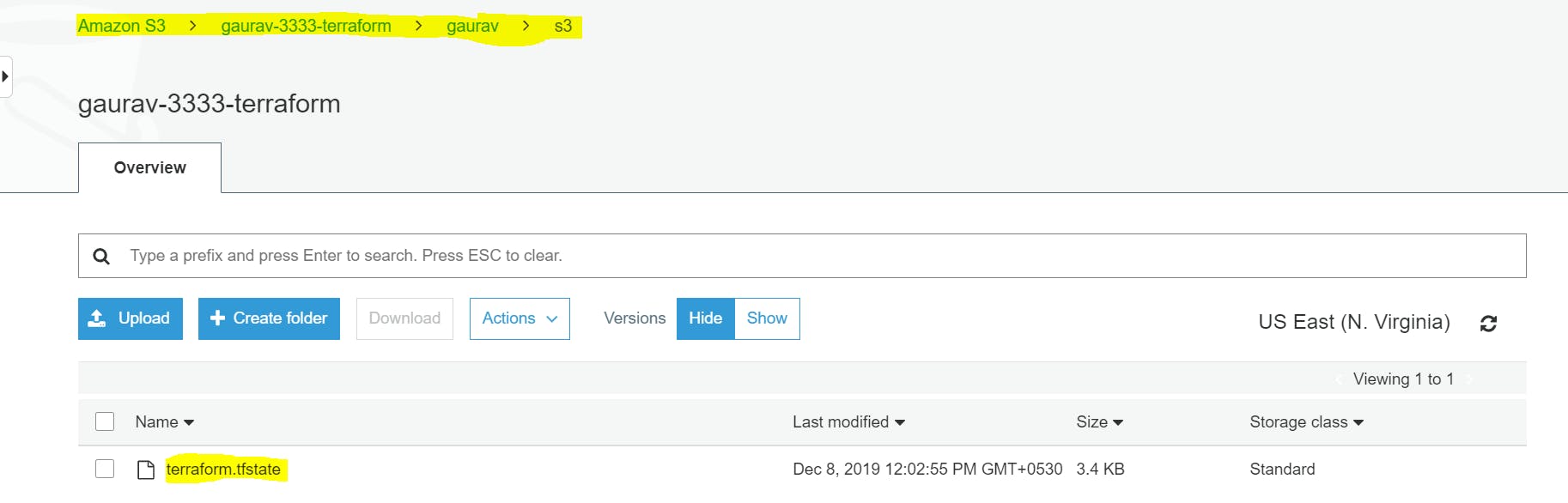

After running the init command, your Terraform state will be stored in the S3 bucket. Let’s check in the console.

S3 Bucket

With this backend enabled, Terraform will automatically pull the latest state from this S3 bucket before running a command, and automatically push the latest state to the S3 bucket after running a command. Isn’t that cool? :D

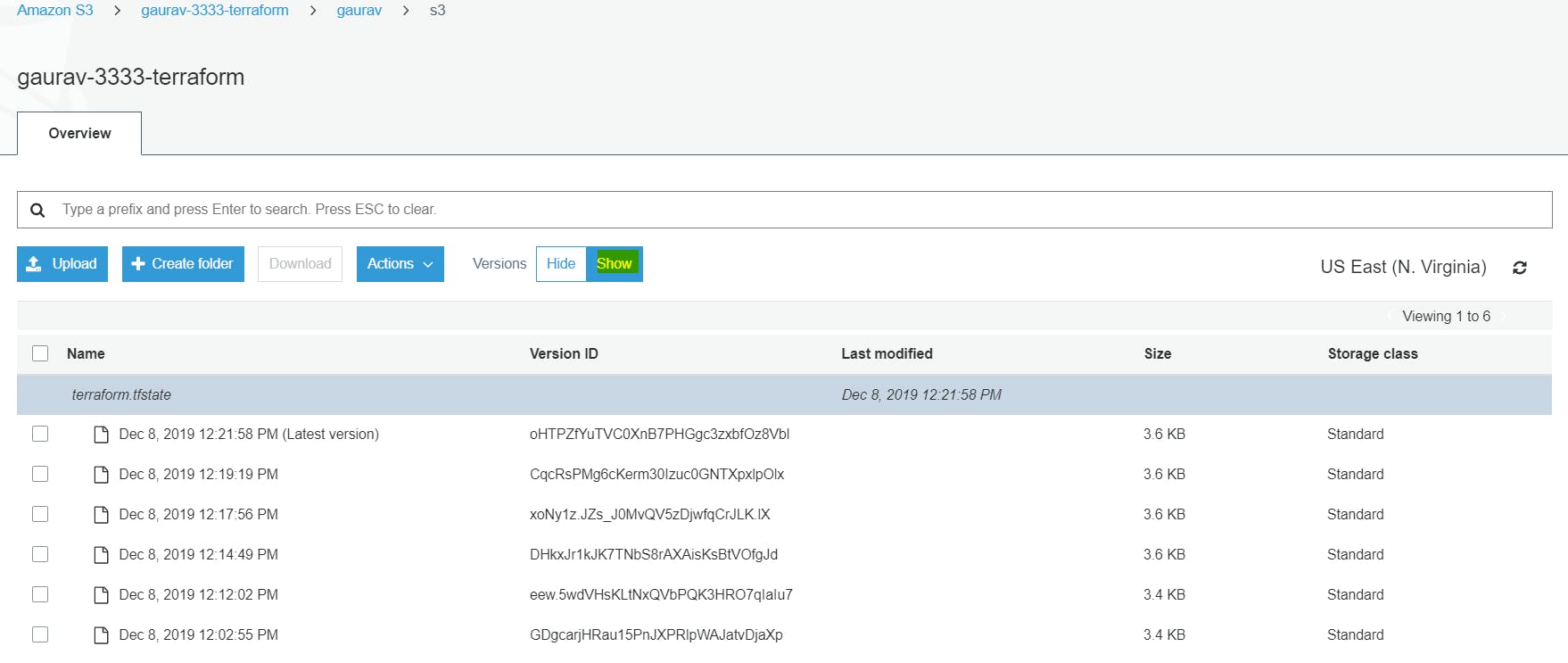
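For reference, an output.tf for this setup might look roughly like the sketch below. The output names are my own choice, and the resource names aws_s3_bucket.terraform_state and aws_dynamodb_table.terraform_locks are assumptions; adjust them to whatever you called the bucket and table resources in your main.tf.

```hcl
# output.tf: a sketch; resource names are assumed, not taken from the post.

output "s3_bucket_arn" {
  value       = aws_s3_bucket.terraform_state.arn
  description = "The ARN of the S3 bucket that stores the state"
}

output "dynamodb_table_name" {
  value       = aws_dynamodb_table.terraform_locks.name
  description = "The name of the DynamoDB table used for locking"
}
```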

Let’s add the above output.tf to your code and run terraform apply.

Multiple Versions of state file

Now you can see multiple versions of your state file. Terraform is automatically pushing and pulling state data to and from S3.

Isn’t that a cool feature in Terraform?